Query By Example by Meredith Patterson was one of this year's Google Summer of Code projects, and of all the projects I've looked at it seems the most exciting. Originally I wanted to wait for some more information about the project before writing about it, but as there weren't any news save some quiet early releases, and as I really need to clear my backlog of topics, I decided to have an early look.

Query By Example sets out to get rid of a current limitation of relational databases: the lack of support for fuzzy searches. Here's the short project description:

Query By Example enables intuitive, qualitative queries in PostgreSQL 8.0 and later -- provide a few sample data points, and let the database find similar rows for you.

I should disclaim that I'm not a specialist for database theory, but as an application developer I've had more than one situation where I ended up loading data sets in order to do a computation to decide which data sets to actually load, and Meredith's approach to remove this detour sounds interesting.

So What's This Used For?

If you now think "Nice, but why would anyone need this functionality?" then you haven't been paying attention. Look around you: nearly all of the most exciting services that are currently out there build upon notions of similarity. Similarity algorithms can be a great tool to navigate our ever-growing sets of data, regardless of the context. Recommendation engines build on similarity.

Take Last.fm and Pandora, two audio streaming sites that attempt to cater to your musical taste by cleverly automating the selection of content -- you provide the services with a (very short) list of musical artists you like, and they create a never-ending stream of audio pleasure, most of which will be a good approximation of what you asked for. The music selection will gradually move within the coordinate system of pop culture that you defined with your query, and just in case you have some time to waste there are means to adjust and refine the selection process. This is the most obvious application of a recommendation engine, and variations of this theme abound all over the 'net.

Other services attempt to connect people that might fit well together, be it that they have similar taste or similar histories. Firefly is one of the earliest (if not the earliest) Internet service that attempted to do that on a large scale, and the current incarnations of this idea are plentiful. I'm not too versed in these kinds of applications so I won't attempt to find interesting links, but you can easily find them yourself. Think dating sites.

And of course there's the modern classic of similarity searches: Amazon's "people who bought this also bought..."

How Does QBE Fit In There?

The way recommendation algorithms work usually results in database access patterns that are quite inefficient. A recommendation algorithm tries to find similarities between data items, and for that it needs an awareness of the complete data set and the relationship between its entities.

The most basic form of a recommendation algorithm selects all data sets from a database, does some computation in order to extract a small subset of the data, and discards the rest. Depending on the size of your database this can quickly become a performance problem, as you have a lot of communication with your database layer for the comparatively little amount of data you actually end up using; and as recommendation engines are typically interesting for huge sets of data (e.g. the complete Amazon book catalog) this becomes a real performance bottleneck. Also don't forget that all database communication might effectively be done over a network, which adds additional latency.

This is a problem for many modern systems that help navigate data sets. When two friends and me built a simple recommendation engine in 2001 we were faced with this exact problem, and as this was a one-off project with limited scope we ended up using this very approach: select the whole database in order to do a really simple computation that couldn't be done in the database itself, all just to get to a small subset of all data. It's obvious that this doesn't scale. (If you're interested to hear more about the project there are some notes in an earlier article about Pandora.)

Of course there are algorithmic workarounds to this disparity of requested data and data that is actually wanted -- starting from caching algorithms and indexes, and ultimately employing sophisticated application logic -- but these are all ways to construct a complex system to solve a relatively simple problem.

So instead of reinventing the wheel over and over it's usually a good idea to find common algorithms and build them into our basic infrastructure, and the database layer lends itself to that in a very obvious way. After all, all you wanted to do was select a subset of your database -- so if you manage to embed your selection mechanism into the database layer you save a lot of unnecessary traffic.

It's actually a mystery that it took so long for an approach like this to surface. Database experts can probably give a whole list of reasons why you don't want to do that, and why custom selection mechanisms are really to be regarded as application logic and hence to be separated from data storage, or whatever it is they worry about (and they must be worried, or why else would it be that nobody else seems to be implementing this) -- but to me it makes a lot of sense, and I'd rather have more powerful selection mechanisms than use stored procedures.

This project is only the lone forerunner of an area of database design that ultimately will be huge. The demand is just too apparent. Everybody will want this.

Enough of the Ranting, Let's See Some Code

Yeah, let's see code...

What can I say without disappointing you -- there is no public documentation that I can find, and the project's single download is a patch for the PostgreSQL code which is (understandably) rather hard to read, and it's even more hard to deduce the actual SQL syntax and selection mechanism, so I don't know what the approach actually looks like. But the last release was in early December, so I'm quite optimistic that we'll see some documentation sooner or later.

Yet after all the build-up I don't want to disappoint you; what follows are sketches of my attempt to trace the subject.

The project makes use of the Kernel-Machine Library by Rutger ter Borg. Kernel Machines are a method of statistical classification in statistical learning theory: a small set of examples in a set of data is labeled manually in a training phase, and using this training data the system creates hyperplanes that attempt to split all items based on their values into two classes (yes and no). Interested parties might have a look at kernel-machines.org for more detailed information, but beware: this is mostly a collection of papers, and thus not an introduction friendly to newcomers. There also is a related Wikipedia article on Support Vector Machines which is just as dense, but might serve as a starting point to browse around.

An entry in the project's list of tasks suggests that there are indeed a training and an execution stage. Another entry suggests that there will be ranking, which I take to be a measure of similarity, and which is a deviation from common support vector machines. The latter tracker entry also indicates how example data sets might be embedded in actual SQL code (here in the form of ORDER BY EXAMPLE).

The PostgreSQL patch contains some hints towards the SQL syntax. If you know a little PostgreSQL and can read YACC then download the patch and search for changes to ./src/backend/parser/gram.y. The SQL keywords and syntax fragments introduced by the patch read interesting, at least as far as you can make out by reading a patch. There are statements for both classification and ranking of entities using linear, gaussian and polynomian kernel types.

And there even is a little SQL at the very end of the patch which might give you a small preview. First a standard table definition:

CREATE TABLE pos_symmetry ( pkey int, a float4, b float4, c float4, d float4, e float4, f float4, g float4, h float4, i float4, j float4, k float4, l float4, m float4 );

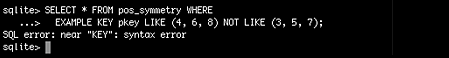

And then the actual query:

SELECT * FROM pos_symmetry WHERE EXAMPLE KEY pkey LIKE (4, 6, 8) NOT LIKE (3, 5, 7);

When Will My Database Support This?

I really don't know. This is at an early stage, and it's a one-woman project. I have no idea what database "vendors" think of these kind of approaches.

It is maybe unnecessary to point out that this of course is nonstandard SQL. It is also quite safe to assume that unless there is a miracle we won't have standardized fuzzy SELECT statements for at least a decade, unless someone is willing to step forward and make a first grand implementation (looks like this might be PostgreSQL?), and some else is willing to accept the challenge and build on it without being too creative, thus creating something like a quasi-standard (please, please let is be SQLite...)

But those are dreams.

|

Comments

Update: Meredith tells me there is a little documentation at http://pgfoundry.org/docman/view.php/1000137/83/howto.html

martin, 2005-12-18 23:29 CET (+0100) Link

You write "It's actually a mystery that it took so long for an approach like this to surface." Perhaps it is because the approach is actually quite old. Hats off to Meredith Patterson for implementing it for Postgresql but Query by Example is so old it gets extended treatment in Jeffrey D. Ullmans's _Principles of Database Systems_, Copyright 1980. The short of it is that Query by Example (QBE) was developed by IBM in the 1970's as a specialized language for use with sceen forms. Some versions of query by example or equivalents where included in data base systems of the 1980's like Unify. For that matter, Unify's non-standard dialect of SQL includes quite similar functionality. Once again, mdi 1980's technology.

The details of the implementation and the effort to make it efficent are worthy of praise.

lmh@world.std.com, 2006-02-13 22:49 CET (+0100) Link

Hey,

thanks for the comment, and for the background info -- looks quite interesting. I don't know if Meredith has looked into that material, but I'm a bit relieved to hear that there has been more research into non-mainstream areas than the current state of database systems suggests.

Which makes it even more strange that database system development today is such a conservative playing field, with apparently little room for original ideas.

Martin Dittus, 2006-02-13 22:58 CET (+0100) Link

Comments are closed. You can contact me instead.